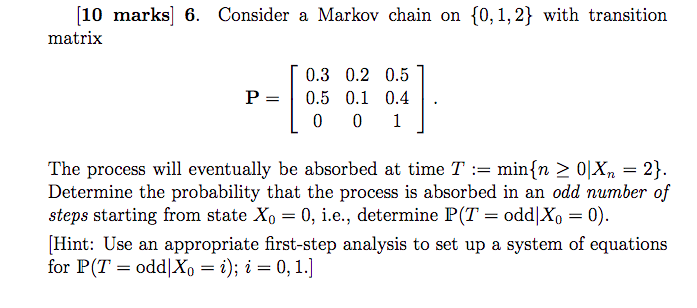

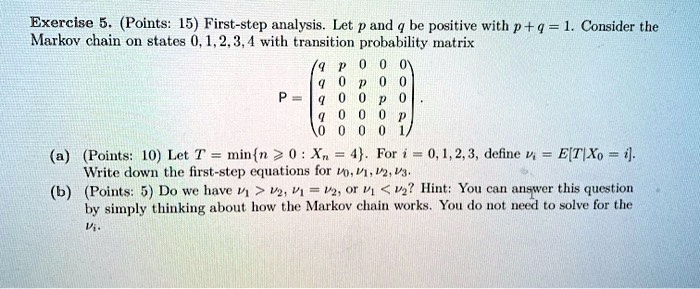

SOLVED: Exercise 5 (Points: [5) First-step analysis. Let p and q be positive with p + q = 1. Consider the Markov chain on states 0, 1, 2, 3, 4 with transition probability matrix (2) (Points: [0) Let T =

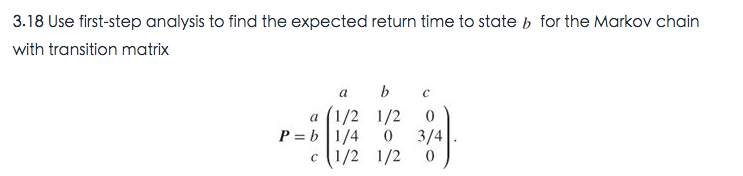

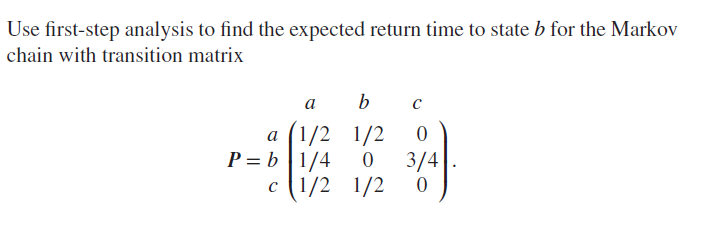

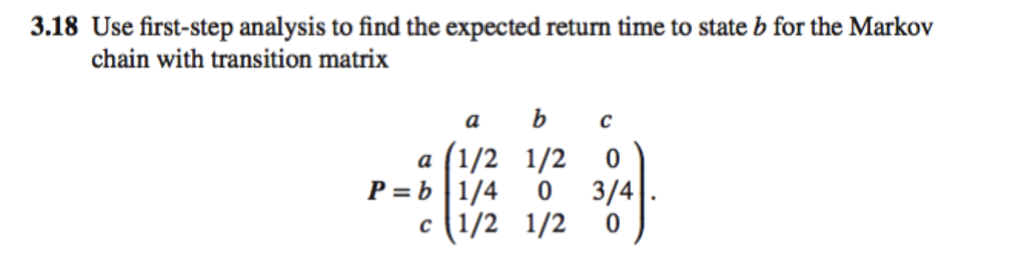

Use the first-step analysis to find the expected return time to state b for the Markov chain with transition matrix | Homework.Study.com

Age-Dependent Transition from Cell-Level to Population-Level Control in Murine Intestinal Homeostasis Revealed by Coalescence Analysis | PLOS Genetics

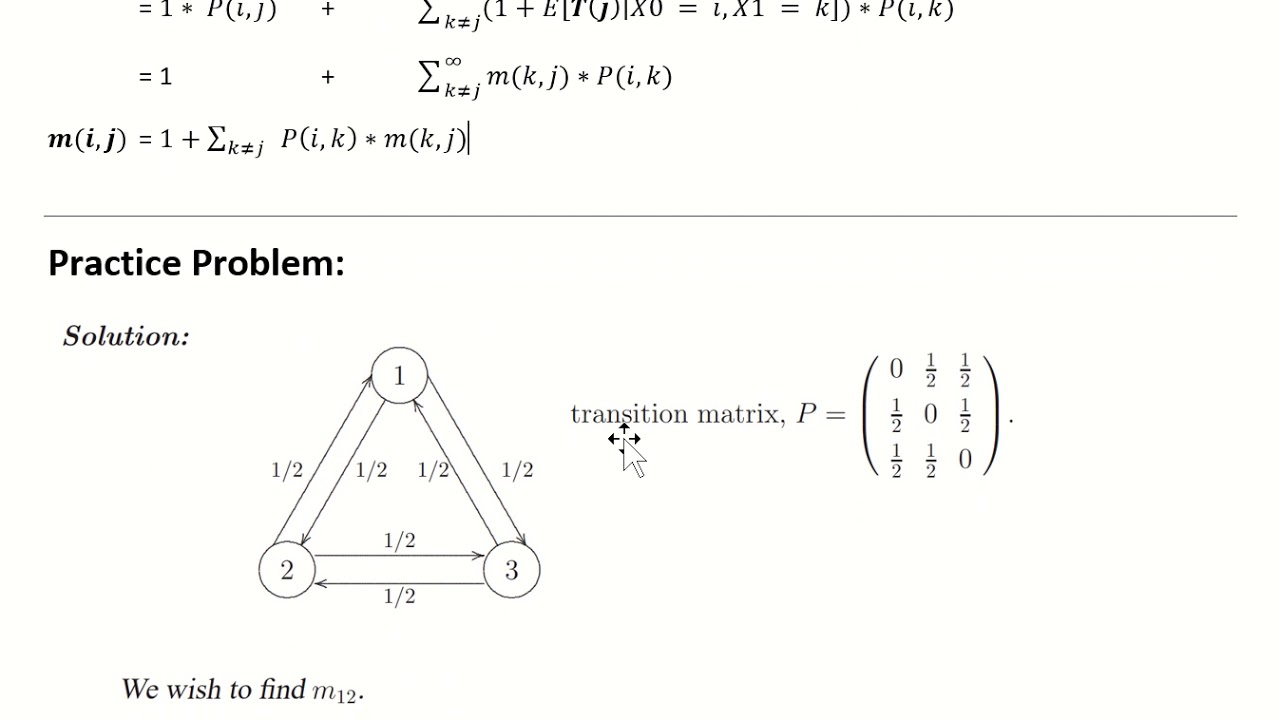

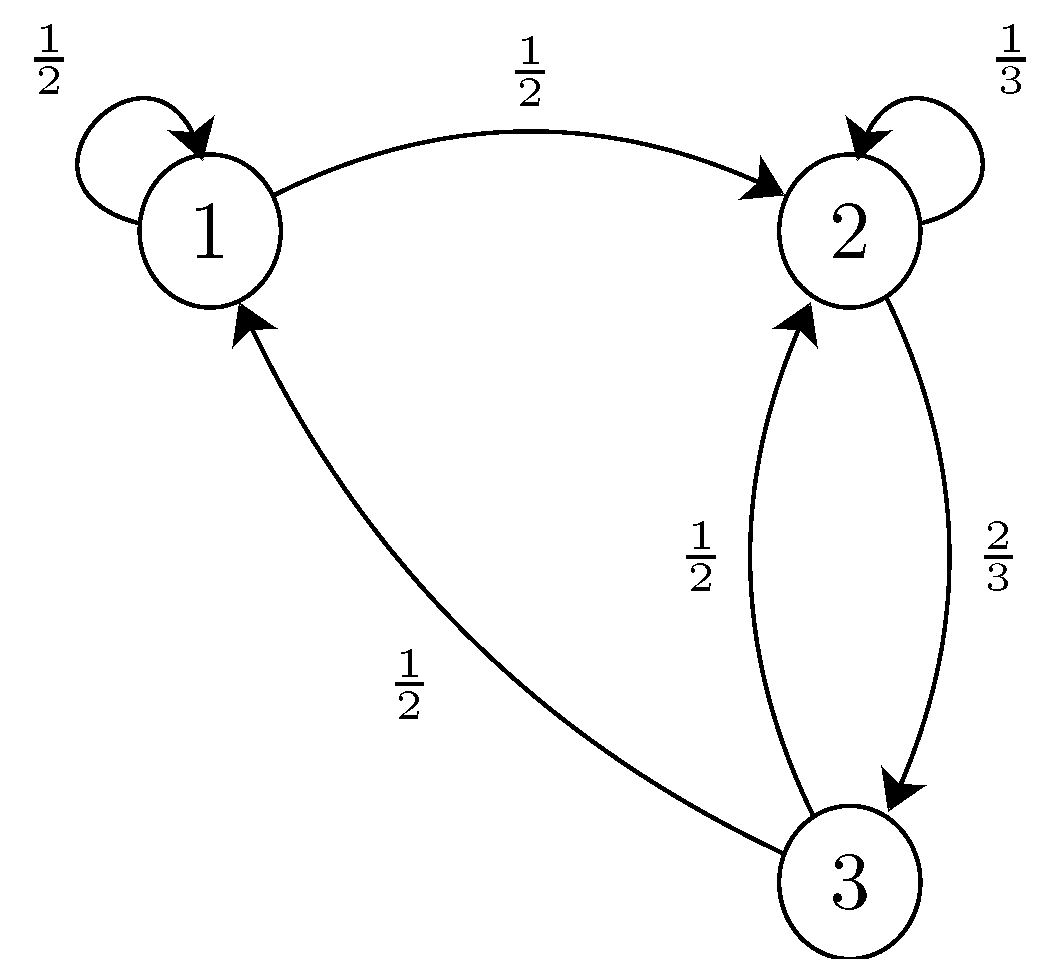

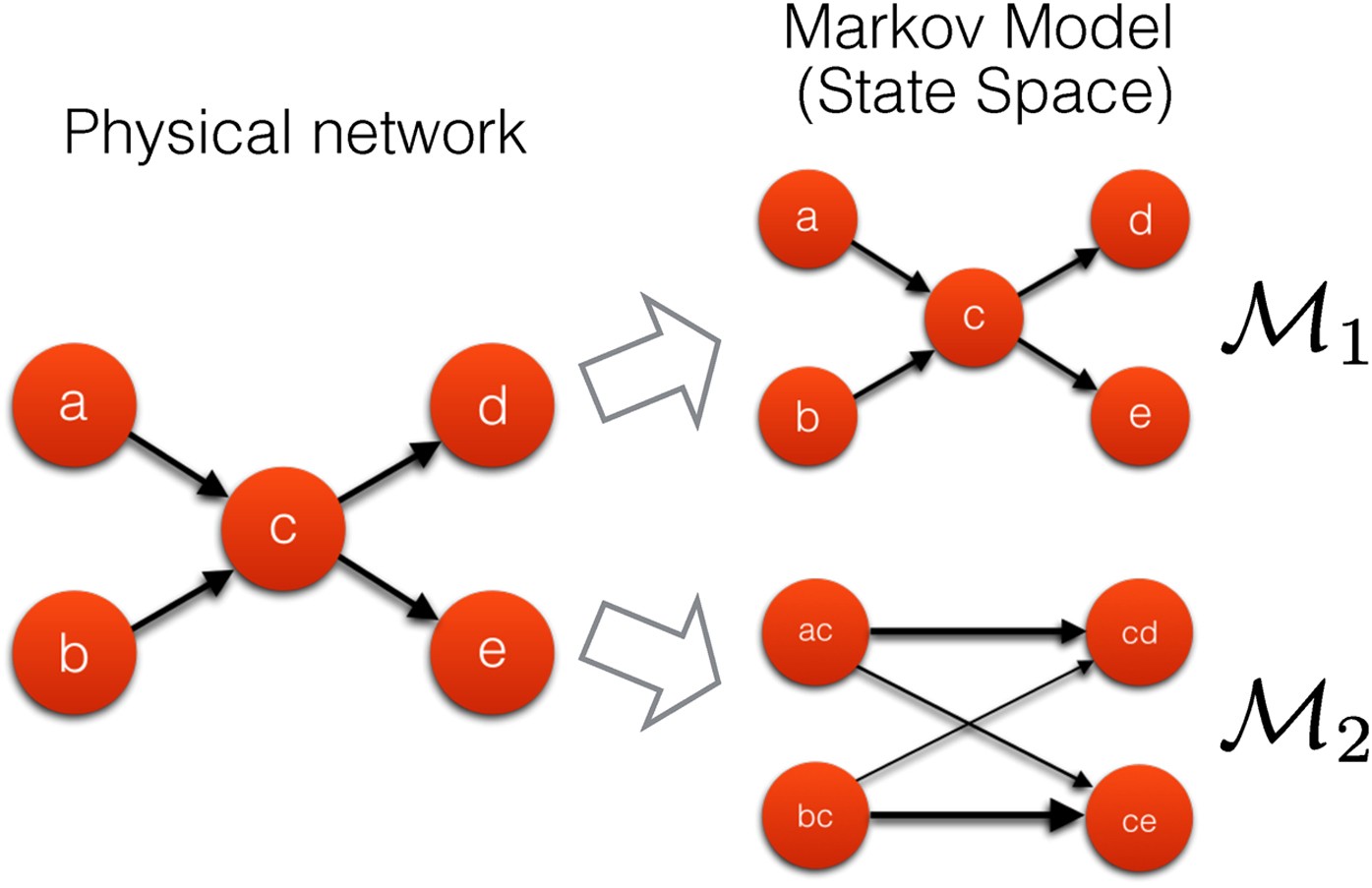

Markov Chains - First Step Analysis - First Step Analysis of Markov Chains, Chapter 3.4 of textbook. 1 Simple First Step Analysis. The Markov Chain {X_n} | Course Hero

APPM 5560 Markov Chains Fall 2019 Exam One, Take Home Part Due Monday, March 4th Welcome to the take-home part of exam I. This i

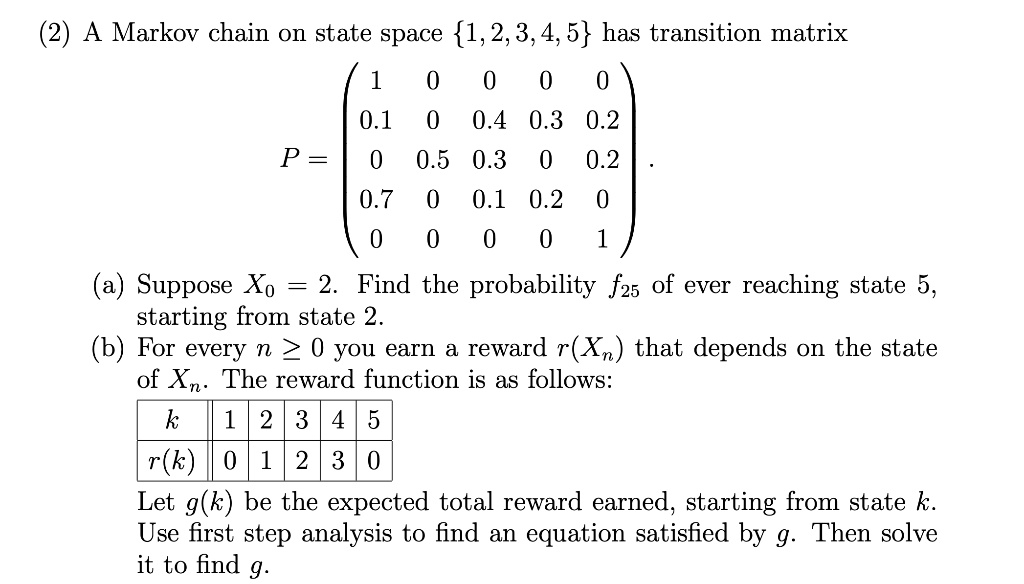

SOLVED: (2) A Markov chain on state space {1, 2, 3, 4, 5} has transition matrix 0.1 0.4 0.3 0.2 P = 0.5 0.3 0 0.2 0 0.1 0.2 0 (a) Suppose X_0 = 2. Find